What is a robots.txt file?

Robots.txt is a crucial file that helps search engine crawlers navigate and index your website effectively. However, despite its importance, many website owners and developers do not fully understand the robots.txt file and its capabilities. In this comprehensive guide, we will explain what robots.txt is, how it works, and how you can use it to improve your website’s search engine optimization (SEO) and user experience.

What is robots.txt?

Robots.txt is a plain text file that webmasters create to instruct search engine robots or crawlers on how to crawl and index their websites. This file is typically placed in the root directory of your website and contains rules that tell search engine bots which pages or sections of your website should be crawled and indexed and which ones should not.

The robots.txt file is not mandatory, but it is highly recommended as it can help prevent search engine bots from crawling pages that are not relevant to your website’s content, improving your website’s overall SEO and user experience.

What is robots.txt?

How does robots.txt work?

When a search engine bot crawls your website, it looks for a robots.txt file in the root directory of your website. If it finds one, it reads the file and follows the instructions contained within it. The instructions in the robots.txt file can tell the bot to crawl certain sections of your website or exclude certain pages or directories from crawling and indexing.

If a search engine bot cannot find a robots.txt file, it will assume that it is allowed to crawl and index all pages on your website. However, if it finds a robots.txt file, it will follow the instructions contained within it.

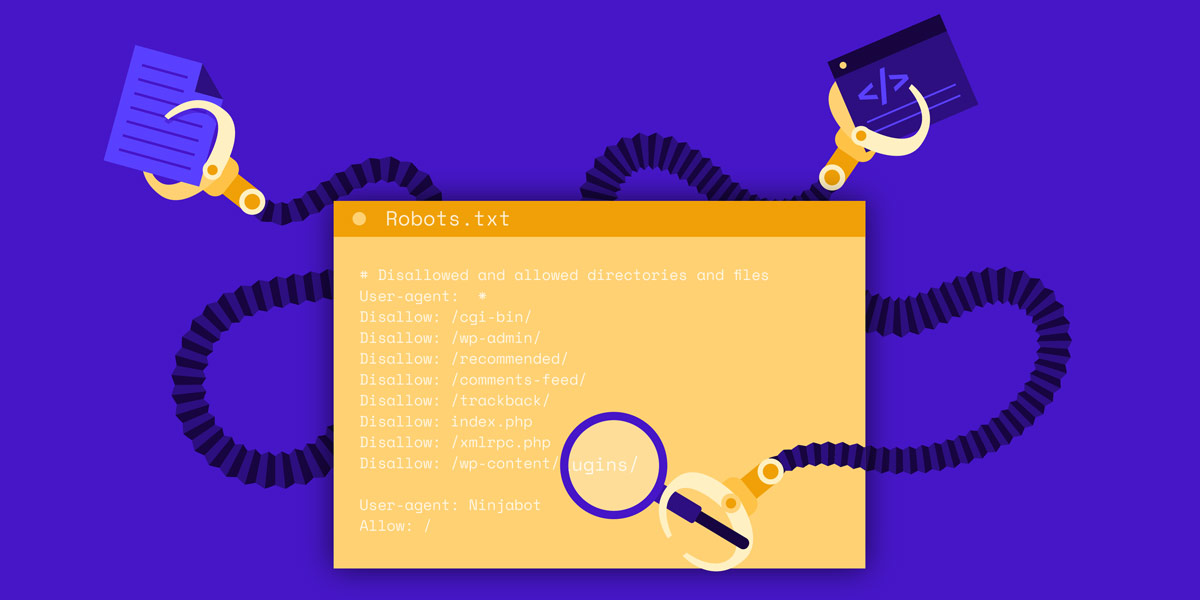

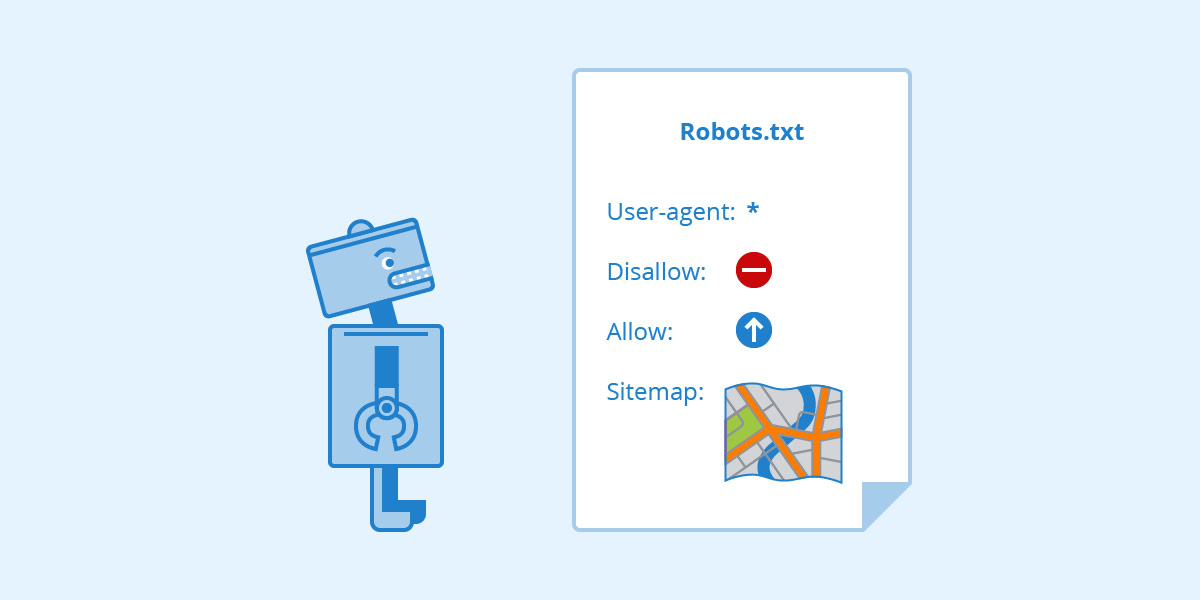

The syntax of robots.txt

The syntax of robots.txt is relatively simple and easy to understand. The file consists of one or more records, and each record contains one or more directives. A directive is a command that tells a search engine bot how to crawl and index your website.

User-agent

The User-agent directive identifies the search engine bot to which the following directives apply. You can specify multiple User-agent directives in your robots.txt file, each with its own set of instructions.

For example, if you want to block all bots from crawling a specific page, you can use the following syntax:

User-agent: * Disallow: /page-to-block

Disallow

The Disallow directive tells search engine bots not to crawl or index a specific page or directory on your website. You can use this directive to block bots from accessing pages that are not relevant to your website’s content or to prevent duplicate content issues.

For example, if you want to prevent search engine bots from crawling a specific directory on your website, you can use the following syntax:

User-agent: * Disallow: /directory-to-block/

Allow

The Allow directive tells search engine bots which pages or directories they are allowed to crawl and index, even if they are located in a directory that has been disallowed. You can use this directive to allow bots to crawl pages that you want to be indexed, but that may be located in a directory that has been disallowed.

For example, if you want to allow search engine bots to crawl a specific page that is located in a directory that has been disallowed, you can use the following syntax:

User-agent: * Disallow: /directory-to-block/ Allow: /directory-to-allow/page-to-allow.html

Sitemap

The Sitemap directive tells search engine bots the location of your sitemap file, which provides a list of all the pages on your website that you want to be indexed. Including a sitemap in your robots.txt file can help search engine bots crawl and index your website more efficiently.

For example, if you have a sitemap file located at https://www.example.com/sitemap.xml, you can use the following syntax:

Sitemap: https://www.example.com/sitemap.xml

Crawl-delay

The Crawl-delay directive tells search engine bots to wait a certain amount of time before crawling your website. This directive can be useful if you want to limit the amount of server resources used by search engine bots.

For example, if you want to tell search engine bots to wait 10 seconds before crawling your website, you can use the following syntax:

User-agent: * Crawl-delay: 10

Wildcards

Wildcards can be used in robots.txt to match multiple URLs. The * character is used to match any string of characters, while the $ character is used to match the end of a URL.

For example, if you want to block all URLs that end with .pdf, you can use the following syntax:

User-agent: * Disallow: /*.pdf$

The syntax of robots.txt

Best practices for robots.txt

To ensure that your robots.txt file is effective and does not harm your website’s SEO, follow these best practices:

Do not use robots.txt to hide sensitive information

Using robots.txt to hide sensitive information from search engine bots is not effective, as it only prevents bots from crawling and indexing the information, but it does not prevent the information from being accessed through other means.

Use robots.txt to block harmful bots

If you notice that certain bots are causing issues on your website, such as excessive crawling or spamming, you can use robots.txt to block them from accessing your website.

Be specific when disallowing pages

When using the Disallow directive, be specific and only block the pages or directories that you do not want to be crawled or indexed. Blocking entire sections of your website can harm your SEO and user experience.

Use a sitemap to complement your robots.txt file

Including a sitemap in your robots.txt file can help search engine bots crawl and index your website more efficiently.

Test your robots.txt file

Before uploading your robots.txt file to your website, test it using Google’s robots.txt Tester to ensure that it is functioning correctly.

Best practices for robots.txt

Common mistakes with robots.txt

Avoid these common mistakes when creating and using your robots.txt file:

Disallowing all bots

Blocking all bots from accessing your website can harm your SEO and prevent your website from being indexed by search engines.

Blocking CSS and JavaScript files

Blocking CSS and JavaScript files can harm your website’s user experience and prevent search engine bots from properly crawling and indexing your website.

Using incorrect syntax

Using incorrect syntax in your robots.txt file can cause search engine bots to ignore the file and crawl all pages on your website.

Common mistakes with robots.txt

Conclusion

In conclusion, the robots.txt file is a crucial element of your website’s SEO and user experience. By understanding how it works and following best practices, you can ensure that search engine bots crawl and index your website correctly, while also protecting sensitive information and preventing harmful bots from accessing your website. Remember to be specific when disallowing pages and directories, and to use a sitemap to complement your robots.txt file. Testing your robots.txt file before uploading it to your website is also important to ensure that it is functioning correctly and not causing any issues.

If you are unsure about how to create or modify your robots.txt file, it is recommended that you consult with an SEO expert or web developer who can provide guidance and ensure that your robots.txt file is optimized for your website’s specific needs.

FAQs

What happens if I do not have a robots.txt file?

If you do not have a robots.txt file, search engine bots will crawl and index your website’s pages by default, unless they are instructed not to do so through other means, such as meta tags or HTTP headers.

Can robots.txt be used to improve my website’s SEO?

Yes, by using robots.txt to block harmful bots and ensure that search engine bots crawl and index your website correctly, you can improve your website’s SEO and user experience.

Can I use robots.txt to hide sensitive information from search engine bots?

No, using robots.txt to hide sensitive information from search engine bots is not effective, as it only prevents bots from crawling and indexing the information, but it does not prevent the information from being accessed through other means.

How often should I update my robots.txt file?

You should update your robots.txt file whenever you make changes to your website’s pages or directory structure, or if you want to modify how search engine bots crawl and index your website.

Can I use wildcards in robots.txt?

Yes, wildcards can be used in robots.txt to match multiple URLs. The * character is used to match any string of characters, while the $ character is used to match the end of a URL.